Abstract

This article proposes a method based on perceptual hashing for identifying duplicate images. This method begins by converting color images to grayscale and then utilizes bilinear interpolation to resize the images, enhancing their consistency and insensitivity to scale. Subsequently, we employ the discrete cosine transform (DCT) to extract low-frequency information from the images, capturing their structural characteristics.We implemented the components using the Swift programming language and tested them using a local image database that contains a large number of images, resulting in impressive findings. The article presents the implementation details of the method. Future work can focus on further enhancing the accuracy and robustness of the method to address more complex variations in images.

Introduction

Starting from macOS Ventura, Apple has introduced a new feature1 for its Photos app -- Duplicate Image Detection. As I am currently enrolled in a digital image processing course this semester, I am very curious about how it is implemented, or rather, how to solve a problem like finding similar images.

This article is a summary of the content I learned in the digital image processing course. I hope it is interesting and can be helpful to those who are currently studying or planning to study in this field.

The truth is, because macOS and the Photos app are closed-source, we are unable to know the exact algorithm they utilize. However, based on the output results and the system's behavior, it appears to be a variant of a perceptual hashing algorithm.

Perceptual Hashing

The Perceptual Hashing2 algorithm is capable of transforming an image into a fixed-size binary integer known as a hash value. This hash value captures the visual characteristics of the image, such that similar images have smaller differences in their hash values.

The key to the Perceptual Hashing lies in its insensitivity to image variations. Even when there are slight changes like size, rotation, flipping, or the addition of noise, the hash value remains relatively consistent. As a result, by comparing the hash values of two images, it is possible to determine their similarity promptly.

In comparison to cryptographic hash functions3, this is simply another extreme. The Perceptual Hashing typically disregards security and instead focuses on preserving the relative characteristics of images. Cryptographic hash functions, on the other hand, map data of arbitrary length to fixed-length hash values and possess the following characteristics:

- Uniqueness: Different inputs should generate different hash values.

- Collision resistance: It is extremely difficult to find two different inputs that produce the same hash value.

- Even slight variations in data should result in significant changes in the output.

Comparing two SHA5124 hash values really only tells you two things. If the hashes are different, then the data is different. And if the hashes are the same, then the data is likely the same. In contrast, perceptual hashes can be compared -- giving you a sense of similarity between the two data sets.

The basic principle of Perceptual Hashing is to convert an image into a grayscale image and performing a series of preprocessing steps, such as resizing. Then, a specific mathematical function (typically Discrete Cosine Transform) is used to convert the image into its spectral representation. Finally, the hash value is calculated based on the spectral representation.

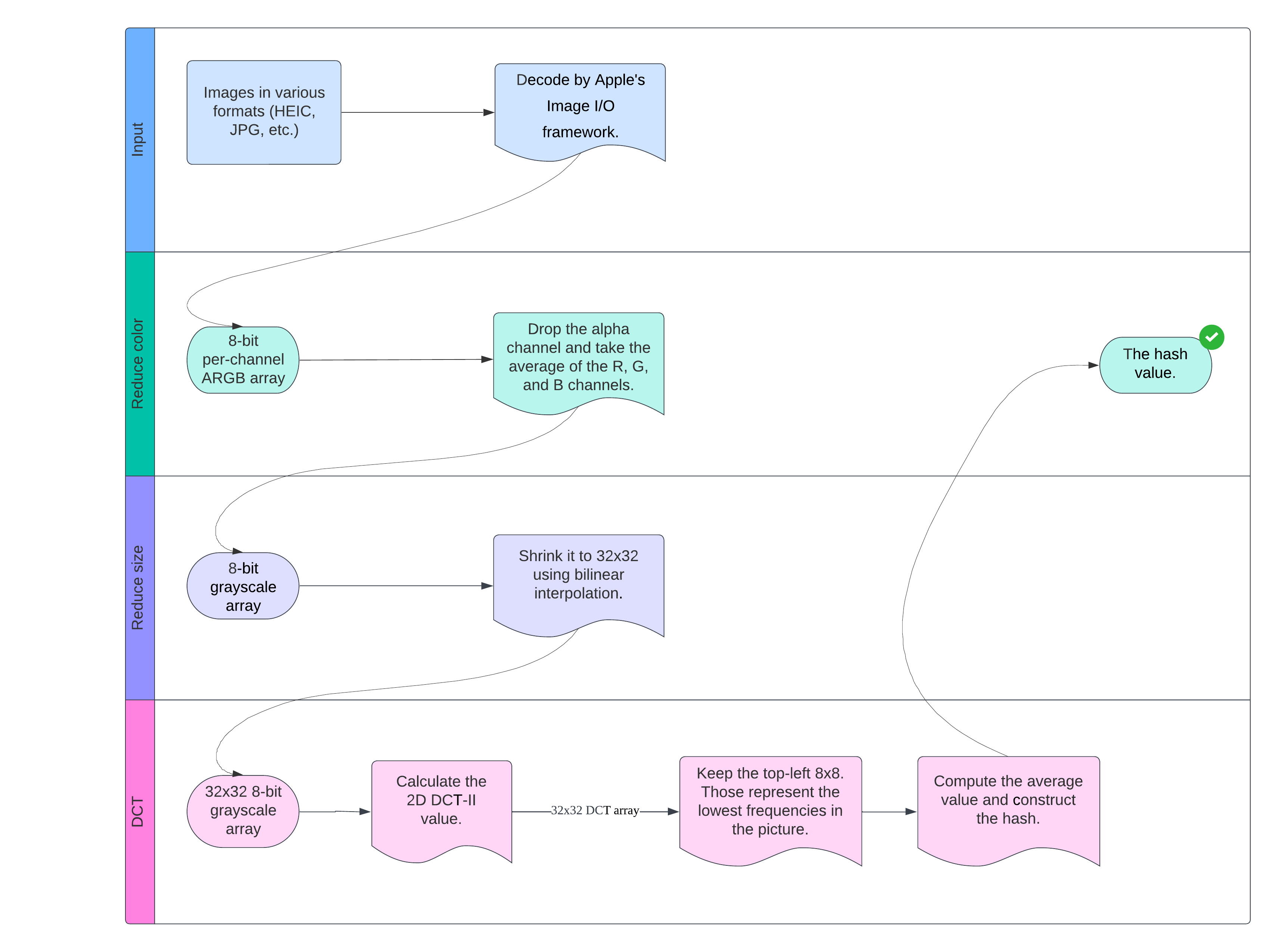

The complete program framework is shown in the following figure.

Next, we will describe the detailed implementation of each step.

Image decoding

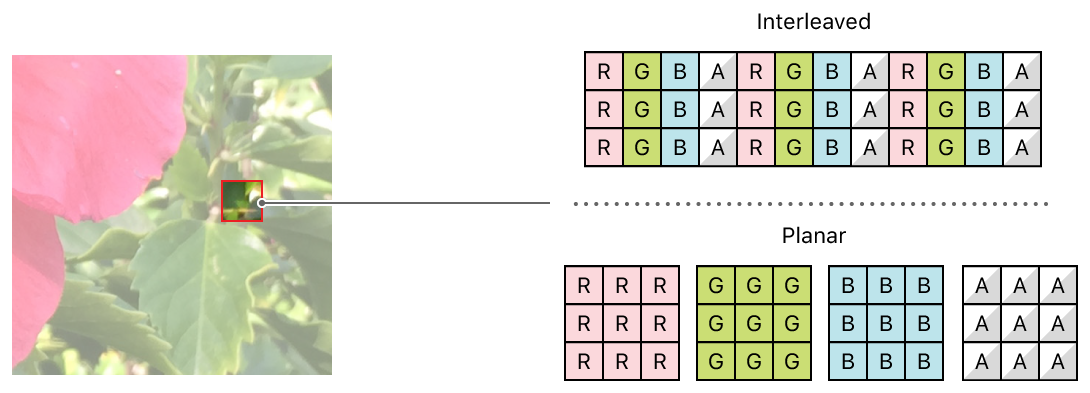

We operates on image data with two memory layouts:

- Interleaved stores each pixel's color data consecutively in a single buffer. For example, the data that describes a 4-channel image (alpha, red, green, and blue) would be stored as

ARGBARGBARGB… - Planar stores each color channel in separate buffers. For example, a 4-channel image would be stored as four individual buffers containing alpha, red, green, and blue data.

Image source: Apple Developer Documentation.

Since operating on a single color channel at a time can simplify our program (allowing the use of the same processing function for different channels), the Planar layout is used here.

However, images stored in files are often compressed or encoded, and they are not in a format that we can directly access. Therefore, our first job is to decode the images into the pixel format we need. Considering that image encoding and decoding are not the focus of this article, we will use a library called image-codec that I have previously implemented. This library wraps the required code for invoking Apple's Image I/O library into two simple functions, significantly reducing our workload. Using the built-in system library has the following advantages: Image I/O supports a wide range of image formats, including standard web formats, high dynamic range images, and raw camera data. It is also the fastest image decoders and encoders for the Mac platform.

Reduce color

We consider different color versions of the same image as similar images. In other words, our program is not sensitive to colors, allowing us to convert color images into grayscale images. In a grayscale image, each pixel's value represents its brightness, without any color information.

Grayscale images use a single channel to represent brightness, unlike color images that employ multiple channels for red, green, and blue color components. As a result, grayscale images have much smaller data sizes compared to color images, reducing computational complexity.

In our implementation, after the image goes through the decoder, we obtain a four-channel, 8-bit per channel ARGB matrix. By discarding the Alpha channel and averaging the RGB channels for each pixel, we derive the grayscale value. This process involves summing the values of the red, green, and blue channels for each pixel and dividing the sum by 3. As a result, this step produces a grayscale image of the same dimensions as the original image but with a single channel.

Reduce size

After obtaining the grayscale image, theoretically, we can directly use the Discrete Cosine Transform (DCT) introduced in the following text to extract the frequency-domain characteristics of the image. However, in practical applications, this approach leads to two problems:

- As mentioned below, the time complexity of performing a naive 2D DCT on an image is $O(h^2w^2)$, where $h$ is the pixel height of the image and $w$ is the pixel width. Considering that most smartphone-captured images have pixel resolutions ranging from 12MP to 48MP, with a default aspect ratio of 4:3, the computation time required to calculate the DCT values of a complete image becomes unacceptable.

- Performing DCT on the full-sized image also implies that our program is sensitive to variations in image dimensions, which is not desirable. We want the program to recognize slightly resized versions of the same image as similar images.

Therefore, it becomes necessary to resize the image. Specifically, we reduce the image size to 32x32, without preserving the original aspect ratio. This resizing approach yields good results for images with resolutions ranging from 12MP to 48MP. Generally, the smaller the scaling, the faster the DCT, but it also increases the likelihood of the program making incorrect judgments.

In this case, we employ bilinear interpolation5 for image scaling. Among the commonly used image scaling algorithms, bicubic interpolation offers greater interpolation accuracy but comes with higher computational complexity. Nearest-neighbor interpolation is faster but produces coarser results. Bilinear interpolation strikes a balance between speed and quality, making it a good choice.

Assuming we want to interpolate an image:

- let's assume the original image $I$ has a size of $R_{in}\times C_{in}$, and the target image $J$ has a size of $R_{out}\times C_{out}$.

- Calculate the scaling factors for the rows ($s_r = R_{in} / R_{out}$) and columns ($s_c=C_{in} / C_{out}$).

- For each pixel $(r', c')$ in the target image, where $r'$ and $c'$ are the coordinates in the target image ($0\leq r'<R_{out}$, $0\leq c'<C_{out}$), perform the following steps:

- Compute the corresponding position $(r, c)$ in the original image, where $r =(r'+\frac12)\times s_r-\frac12$, $c=(c'+\frac12)\times s_c-\frac12$. The offset of $\frac12$ aligns the pixels at the corners of the input and target images.

- Determine the positions of the four neighboring pixels in the original image: top-left $(r_0, c_0) = (\lfloor r\rfloor, \lfloor c\rfloor)$, top-right $(r_0, c_1) = (\lfloor r\rfloor, \lceil c \rceil)$, bottom-left $(r_1, c_0) = (\lceil r\rceil, \lfloor c\rfloor)$, and bottom-right $(r_1, c_1) = (\lceil r\rceil, \lceil c\rceil)$.

- Calculate the offsets of the original pixel relative to the top-left pixel: $\Delta r=r -r_0$, $\Delta c = c - c_0$.

- Perform linear interpolation in both directions, calculating $f_1 = (1 - \Delta r)(1 - \Delta c)$, $f_2=\Delta r (1 - \Delta c)$, $f_3=(1 - \Delta r) \Delta c$, $f_4=\Delta r \Delta c$.

- The final interpolated value for that pixel is $J(r',c')=I(r_0,c_0)f_1+I(r_1,c_0)f_2+I(r_0,c_1)f_3+I(r_1,r_1)f_4$.

- Repeat step 3 for all pixels in the target image.

By using bilinear interpolation, we can scale the image to the desired size.

Related code: BilinearInterpolation.swift.

DCT

In the one-dimensional Discrete Cosine Transform (DCT), $N$ real numbers $x_0, ..., x_{N-1}$ are transformed into $N$ real numbers $X_0, ..., X_{N-1}$ through the following equation:

$$X_k = \sum_{n=0}^{N-1}x_n\cos\left(\frac\pi N(n+\frac12)k\right)\quad k=0,…,N-1$$

Next, the $X_0$ term is multiplied by $\frac{1}{\sqrt N}$, and the remaining terms are multiplied by $\frac{\sqrt2}{\sqrt N}$, ensuring the resulting DCT matrix is orthogonal. It can be observed that the time complexity of the one-dimensional DCT is $O(N^2)$.

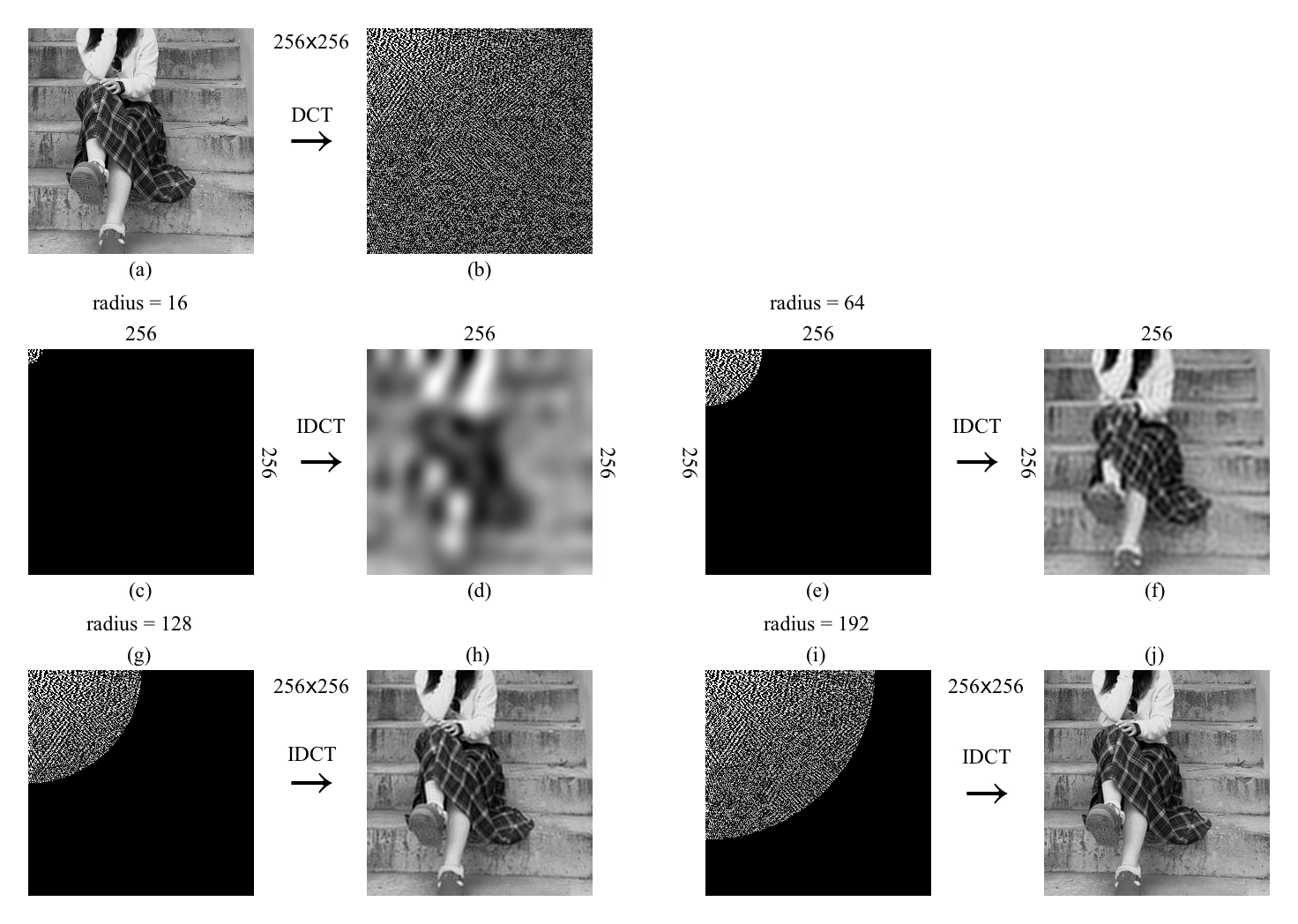

However, a one-dimensional DCT is not enough for image data. Fortunately, based on the properties of summation symbols6, we can first apply DCT to each row of the image matrix, and then apply DCT to each column. This process completes a two-dimensional DCT. Assuming the size of the image matrix is $N \times M$, the time complexity of the two-dimensional DCT is $O(N^2M^2)$.

Related code: DiscreteCosineTransform.swift.

Construct the hash value

After the aforementioned calculations, we obtain a $32\times32$ DCT matrix for the image, which encodes the frequency information of the scaled image. We only keep the top-left $8\times8$ matrix, which represents the low-frequency information in the image.

Next, we compute the average of this smaller matrix, excluding the first element. The first element has a significant numerical difference compared to the others and can disrupt the overall average of the matrix. Thus, we calculate the average of the remaining $63$ elements.

The final step is to construct a hash value by setting a 64-bit unsigned number. The value of each bit $i$ depends on whether the $i$-th element in the DCT low-frequency information matrix is greater than or equal to the average value.

By comparing the Hamming distance7 between the hash values of different images, we can determine whether they are similar. The smaller the Hamming distance between the hash values, the more similar the images. Based on my (preliminary) testing on a database of over 20,000 images, a Hamming distance of less than or equal to 5 appears to be a reasonable threshold. (Due to time constraints, I was unable to conduct extensive testing on different parameters of the algorithm in this context.)

The next figure illustrates the process of our program operating on three example images.

References

1. https://www.apple.com/macos/ventura/features/ ↑

2. ZAUNER, Christoph. Implementation and benchmarking of perceptual image hash functions. 2010. ↑

3. MENEZES, Alfred J., et al. Handbook of Applied Cryptography. CRC Press, 1996. ↑

4. PENARD, Wouter; VAN WERKHOVEN, Tim. On the secure hash algorithm family. Cryptography in context, 2008, 1-18. ↑

5. GONZALES, R.; WOODS, R. Digital Image Processing. Image, 2008. ↑

6. GRAHAM, Ronald L., et al. Concrete mathematics: a foundation for computer science. Computers in Physics, 1989. ↑

7. https://en.wikipedia.org/wiki/Hamming_distance ↑

8. https://www.hackerfactor.com/blog/index.php?/archives/432-Looks-Like-It.html